You hear “containerized data center” and immediately picture a shipping crate stuffed with servers, right? That’s the common mental shortcut, but it’s also where the misconceptions start. It’s not just about putting gear in a box; it’s about rethinking the entire delivery and operation model for compute and storage. I’ve seen projects where teams ordered these units thinking they were buying simplicity, only to wrestle with integration headaches because they treated the container as an isolated black box. The real shift is in the mindset: from building a room to deploying an asset.

Beyond the Steel Box: The System Inside

The container itself, the 20- or 40-foot ISO standard shell, is the least interesting part. It’s what’s pre-integrated inside that defines its value. We’re talking about a fully functional data center module: not just racks and servers, but the complete supporting infrastructure. That means power distribution units (PDUs), often with step-down transformers, uninterruptible power supplies (UPS), and a cooling system designed for high-density loads in a constrained space. The integration work happens in the factory, which is the key differentiator. I recall a deployment for a remote mining operation; the biggest win wasn’t the rapid deployment, but the fact that all the sub-systems had been stress-tested together before it left the dock. They flipped the switch and it just worked, because the factory floor had already simulated the thermal and power load.

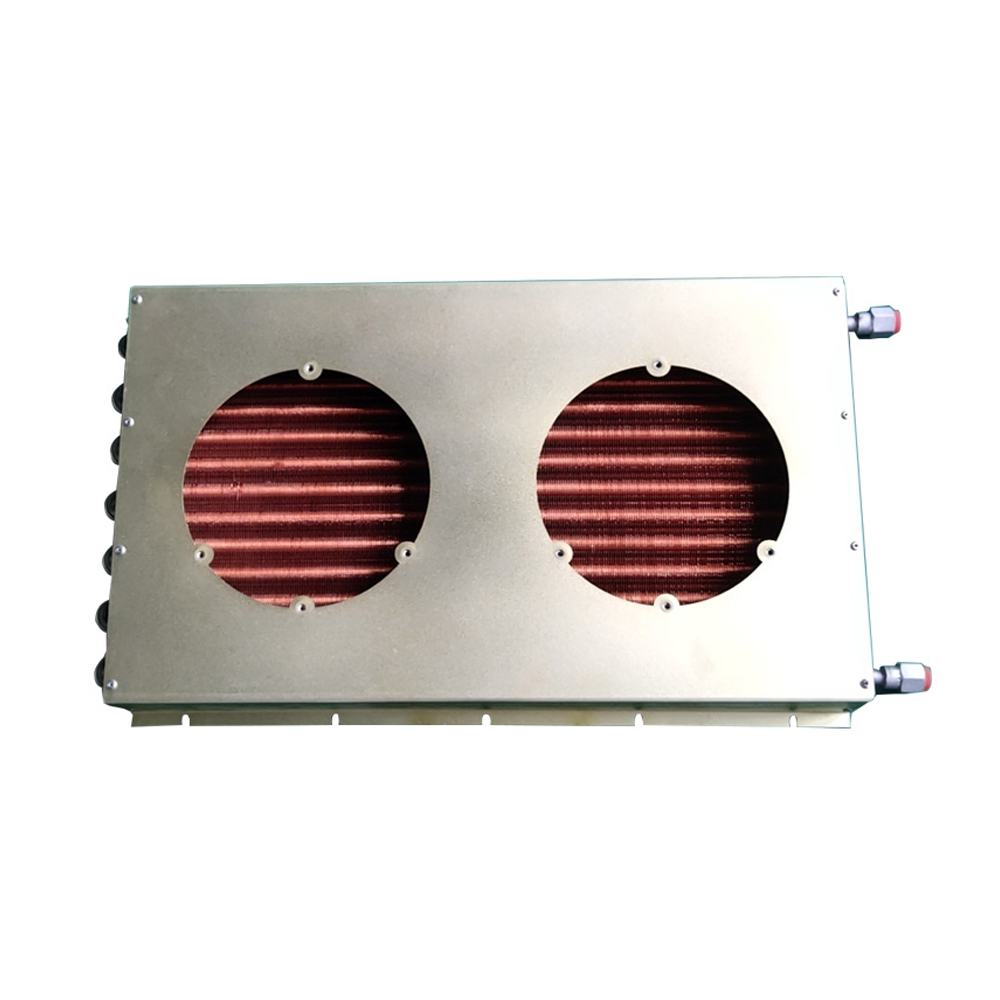

This factory-built approach exposes a common pitfall: assuming all containers are created equal. The market has everything from lightly modified IT pods to ruggedized, military-grade units. The cooling solution, for instance, is a major differentiator. You can’t just slap a standard room AC on a 40 kW+ rack load in a sealed metal box. I’ve evaluated units where the cooling was an afterthought, leading to hot spots and compressor failures within months. This is where expertise from industrial cooling specialists becomes critical. Companies that understand thermal dynamics in harsh, enclosed environments, like Shanghai SHENGLIN M&E Technology Co.,Ltd, bring the necessary rigor. SHENGLIN (https://www.shenglincoolers.com) is known as a leading manufacturer in the cooling industry, and its focus on industrial cooling technologies translates directly into solving the tough heat rejection problems these dense containers create. It’s a good example of how the supporting tech ecosystem matures around a core concept.
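To put rough numbers on why room-grade AC falls short, here is a minimal sketch of the standard sensible-heat airflow estimate. It assumes textbook air properties (density about 1.2 kg/m³, specific heat about 1005 J/(kg·K)) and a hypothetical 12 K air temperature rise across the rack. The function name and the example figures are illustrative and do not come from any vendor’s sizing method.

```python
# Sensible-heat airflow estimate: V = P / (rho * cp * dT).
# Air properties below are assumed textbook values, not measured site data.
AIR_DENSITY_KG_M3 = 1.2      # assumed: air near 20 °C at sea level
AIR_CP_J_PER_KG_K = 1005.0   # assumed: specific heat of dry air
M3S_TO_CFM = 2118.88         # unit conversion, m^3/s to cubic feet per minute


def required_airflow_m3_per_s(heat_load_w: float, delta_t_k: float) -> float:
    """Airflow needed to carry heat_load_w away at a given air temperature rise."""
    if delta_t_k <= 0:
        raise ValueError("delta_t_k must be positive")
    return heat_load_w / (AIR_DENSITY_KG_M3 * AIR_CP_J_PER_KG_K * delta_t_k)


if __name__ == "__main__":
    # Hypothetical case: one 40 kW rack with a 12 K rise from inlet to outlet.
    flow = required_airflow_m3_per_s(40_000, 12.0)
    print(f"{flow:.2f} m^3/s, about {flow * M3S_TO_CFM:.0f} CFM")
```

Under those assumptions a single 40 kW rack needs roughly 2.8 m³/s of air (close to 5,900 CFM), which is why the cooling has to be engineered for the enclosure rather than bolted on.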

And then there’s power. The density forces you to confront power distribution head-on. You’re dealing with 400 V or 480 V three-phase power coming in, and you need to distribute it safely and efficiently at the rack level. I’ve seen PDUs melt because the in-container cabling wasn’t rated for the actual load profile. The lesson? The bill of materials for the container’s infrastructure needs to be scrutinized as closely as the server specs.
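As a sanity check when reviewing that bill of materials, the line current of a balanced three-phase load follows I = P / (√3 × V × PF). The sketch below assumes a balanced load, a power factor of 0.95, and an 80% continuous-load factor; all three are illustrative assumptions, and the applicable electrical code and the manufacturer’s ratings govern any real design.

```python
import math


def line_current_a(load_w: float, line_voltage_v: float, power_factor: float = 0.95) -> float:
    """Per-phase line current (A) for a balanced three-phase load.

    line_voltage_v is the line-to-line voltage; power_factor is an assumed value.
    """
    if line_voltage_v <= 0 or not 0 < power_factor <= 1:
        raise ValueError("voltage must be positive and power factor in (0, 1]")
    return load_w / (math.sqrt(3) * line_voltage_v * power_factor)


def min_circuit_rating_a(current_a: float, continuous_factor: float = 0.8) -> float:
    """Smallest circuit rating if a continuous load may use only
    continuous_factor of the rating (assumed 80% here)."""
    return current_a / continuous_factor


if __name__ == "__main__":
    for volts in (400, 480):
        amps = line_current_a(40_000, volts)
        print(f"{volts} V: {amps:.1f} A per phase, "
              f"circuit rated for at least {min_circuit_rating_a(amps):.1f} A")
```

With these assumptions a 40 kW rack draws about 61 A per phase at 400 V and about 51 A at 480 V, before any derating for ambient temperature inside the container.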

The Deployment Reality: It’s Not Plug and Play

The sales pitch often revolves around speed: “Deploy in weeks, not months!” That’s true for the container itself, but it glosses over the site work. The container is a node, and nodes need connections. You still need a prepared site with a foundation, utility hookups for high-capacity power and water (if you’re using chilled water cooling), and fiber connectivity. I was involved in a project where the container arrived on schedule, but sat on the tarmac for six weeks waiting for the local utility to run the dedicated feeder. The delay wasn’t in the tech; it was in the civil and utility planning that everyone had overlooked.

Another gritty detail: weight and placement. A fully loaded 40-foot container can weigh over 30 tons. You can’t just drop it on any patch of asphalt. You need a proper concrete pad, often with crane access. I remember one installation where the chosen site required a massive crane to lift the unit over an existing building. The cost and complexity of that lift almost negated the time savings. Now, the trend toward smaller, more modular units you can roll into place is a direct response to these real-world logistics headaches.

Once it’s placed and hooked up, the operational model changes. You’re not walking into a raised-floor environment. You’re managing a sealed appliance. Remote management and monitoring become non-negotiable. All the infrastructure—power, cooling, security, fire suppression—needs to be accessible via the network. If the containerized data center doesn’t have a robust out-of-band management system that gives you full visibility, you’ve just created a very expensive, inaccessible black box.
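What “full visibility” means in practice can be sketched as a simple limit check over the telemetry the container reports. The sensor names and thresholds below are hypothetical, and a real unit would expose its own points through whatever management interface the vendor provides. Note that a missing reading is treated as an alarm, because a silent sensor is exactly how a black box starts.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Limit:
    low: float
    high: float


# Hypothetical sensor names and limits, for illustration only.
LIMITS = {
    "supply_air_temp_c": Limit(18.0, 27.0),
    "ups_battery_pct": Limit(50.0, 100.0),
    "pdu_load_pct": Limit(0.0, 80.0),
}


def find_alarms(readings: dict[str, float]) -> list[str]:
    """Return one message per sensor that is out of range or not reporting."""
    alarms = []
    for name, limit in LIMITS.items():
        value = readings.get(name)
        if value is None:
            alarms.append(f"{name}: no reading")
        elif not limit.low <= value <= limit.high:
            alarms.append(f"{name}: {value} outside {limit.low}-{limit.high}")
    return alarms


if __name__ == "__main__":
    # The PDU reading is deliberately absent to show the "no reading" case.
    sample = {"supply_air_temp_c": 31.5, "ups_battery_pct": 96.0}
    for alarm in find_alarms(sample):
        print(alarm)
```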

Use Cases: Where It Actually Makes Sense

So where does this model truly shine? It’s not for replacing your corporate data center. It’s for edge computing, disaster recovery, and temporary capacity. Think cell tower aggregation sites, oil rigs, military forward operating bases, or a rapid recovery pod for a flood zone. The value proposition is strongest when the alternative is building a permanent brick-and-mortar facility in a logistically challenging or temporary location.

I worked with a media company that used them for on-location rendering during major film productions. They’d ship a container to a remote shoot, hook it up to generators, and have petabytes of storage and thousands of compute cores available where the data was created. The alternative was shipping raw footage over satellite links, which was prohibitively slow and expensive. The container was a mobile digital studio.
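The arithmetic behind “prohibitively slow” is easy to sketch. The figures below (one petabyte, a 100 Mbit/s link) are hypothetical and not taken from that project, and the efficiency parameter is an assumed allowance for protocol overhead.

```python
SECONDS_PER_DAY = 86_400


def transfer_days(data_bytes: float, link_bits_per_s: float, efficiency: float = 1.0) -> float:
    """Days needed to move data_bytes over a link at the given usable fraction."""
    if link_bits_per_s <= 0 or not 0 < efficiency <= 1:
        raise ValueError("link rate must be positive and efficiency in (0, 1]")
    seconds = data_bytes * 8 / (link_bits_per_s * efficiency)
    return seconds / SECONDS_PER_DAY


if __name__ == "__main__":
    # Hypothetical: 1 PB (10**15 bytes) over a fully used 100 Mbit/s link.
    print(f"{transfer_days(1e15, 100e6):.0f} days")
```

Even with the link fully saturated, that hypothetical petabyte takes about 926 days to move, so bringing the compute to the data is the only workable option.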

But there’s a cautionary tale here too. A financial client bought one for burst capacity during trading hours. The problem was, it sat idle 80% of the time. The capital was tied up in a depreciating asset that wasn’t generating core value. For truly variable workloads, the cloud often wins. The container is a capital expenditure for a semi-permanent need. The calculus has to be about total cost of ownership over years, not just deployment speed.
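A rough way to run that calculus is to spread the lifetime cost over the hours the unit actually does useful work. The sketch below uses hypothetical capital, operating, and cloud figures; only the 20% utilization comes from the case above. It ignores financing, residual value, and refresh costs, so treat it as a first-pass screen rather than a full TCO model.

```python
HOURS_PER_YEAR = 8760


def cost_per_utilized_hour(capex: float, annual_opex: float, years: int, utilization: float) -> float:
    """Lifetime cost divided by the hours the container is actually in use."""
    if years <= 0 or not 0 < utilization <= 1:
        raise ValueError("years must be positive and utilization in (0, 1]")
    total_cost = capex + annual_opex * years
    return total_cost / (HOURS_PER_YEAR * years * utilization)


def break_even_utilization(capex: float, annual_opex: float, years: int, cloud_rate_per_hour: float) -> float:
    """Utilization at which the container matches an equivalent cloud hourly rate.

    A result above 1.0 means the container cannot break even at that rate.
    """
    if years <= 0 or cloud_rate_per_hour <= 0:
        raise ValueError("years and cloud_rate_per_hour must be positive")
    total_cost = capex + annual_opex * years
    return total_cost / (HOURS_PER_YEAR * years * cloud_rate_per_hour)


if __name__ == "__main__":
    # Hypothetical figures: 2.0M capex, 200k/year opex, 5-year life.
    busy = cost_per_utilized_hour(2_000_000, 200_000, 5, 1.0)
    idle = cost_per_utilized_hour(2_000_000, 200_000, 5, 0.2)
    print(f"fully used: {busy:.2f}/h, idle 80% of the time: {idle:.2f}/h")
    print(f"break-even vs 150/h cloud: {break_even_utilization(2_000_000, 200_000, 5, 150):.0%}")
```

Whatever the inputs, a unit that sits idle 80% of the time costs five times as much per useful hour as one that is fully used, which is the gap the cloud exploits for bursty workloads.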

The Evolution and the Niche

The early days were about brute force: packing as many kilowatts into a box as possible. Now, it’s about intelligence and specialization. We’re seeing containers designed for specific workloads, like AI training with direct liquid cooling, or for harsh environments with filtration systems for sand and dust. The integration is getting smarter, with more predictive analytics built into the management layer.

It’s also becoming a strategic tool for data sovereignty. You can place a container within a country’s borders to comply with data residency laws without building a full facility. It’s a physical, sovereign cloud node.

Looking back, the containerized data center concept forced the industry to think in terms of modularity and prefabrication. Many of the principles are now trickling down into traditional data center design—pre-fab power skids, modular UPS systems. The container was the extreme proof of concept. It showed that you could decouple the construction timeline from the technology refresh cycle. That, in the end, might be its most lasting impact: not the boxes themselves, but the change in how we think about building the infrastructure that holds our digital world.