You hear “container data center” and the mind jumps to those glossy vendor slides: plug-and-play, drop-anywhere, the ultimate in agile, green IT. Having spent the better part of a decade on the ground deploying and retrofitting these units, I can tell you the reality is a lot messier, and the sustainability question isn’t a simple yes or no. It’s a balance sheet of trade-offs, often dictated by the brutal thermodynamics of a steel box rather than by marketing promises.

The Allure and the Immediate Reality

The pitch is compelling, especially for edge computing or temporary capacity. You get a pre-fabricated, standardized container server room shipped to site. It promises rapid deployment, which it often delivers. I’ve seen a 40-foot unit go from delivery to serving live traffic in under three weeks, where a brick-and-mortar build would still be in the permitting phase. That speed itself has a sustainability angle: less prolonged on-site construction, fewer truck rolls over time.

But step inside one on a summer day in, say, a logistics park outside Shanghai. The first hit is acoustic: a relentless roar from the high-static-pressure fans fighting to push air through densely packed racks. Then comes the thermal stratification. Despite the best CFD models, you’ll find hot spots. We’d instrument them with dozens of sensors, and the delta between the cold aisle and the top of the rear doors could be startling, sometimes 15°C or more. That inefficiency translates directly into power usage effectiveness (PUE) creep. The design PUE of 1.1 often balloons to 1.3 or higher in practice because the cooling system is constantly in panic mode, overcompensating for those hot spots.
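
PUE is total facility energy divided by IT equipment energy. That makes the slide from 1.1 to 1.3 worse than it sounds. Non-IT overhead triples, and total facility draw rises by roughly 18% for the same IT load. The small sketch below works through that arithmetic. The function names and the example energy figures are illustrative, not taken from a specific site.

```python
def pue(it_kwh: float, cooling_kwh: float, other_overhead_kwh: float = 0.0) -> float:
    """Power usage effectiveness: total facility energy divided by IT equipment energy."""
    if it_kwh <= 0:
        raise ValueError("IT energy must be positive")
    return (it_kwh + cooling_kwh + other_overhead_kwh) / it_kwh


def overhead_per_it_kwh(pue_value: float) -> float:
    """Non-IT energy (cooling, power-chain losses, lighting) spent per kWh delivered to IT."""
    return pue_value - 1.0


# Illustrative period: 1,000 kWh of IT load, 250 kWh of cooling, 50 kWh of other losses
print(f"PUE: {pue(1000.0, 250.0, 50.0):.2f}")

design, observed = 1.1, 1.3
overhead_ratio = overhead_per_it_kwh(observed) / overhead_per_it_kwh(design)
total_increase = observed / design - 1.0  # extra facility energy for the same IT load
print(f"non-IT overhead: {overhead_ratio:.1f}x design")
print(f"total facility energy: +{total_increase:.1%}")
```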

This is where the rubber meets the road for sustainability. A super-efficient chip is no good if you’re burning an extra 30% on top of its power draw just to keep it from throttling. The “sustainable tech trend” label hinges entirely on operational efficiency, not just on the recyclable steel of the container. I’ve spent countless hours with thermal imaging cameras and adjustable blanking plates, tweaking airflow and essentially tuning the container like an engine after delivery. That’s rarely in the brochure.
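
A first pass at that tuning loop is mostly bookkeeping. Pair each rack’s cold-aisle reading with the reading at the top of its rear door, then rank the largest deltas so you know where to start with the blanking plates. The sketch below assumes one sensor of each kind per rack. The `RackReading` type, the rack IDs, and the readings are hypothetical. The default threshold reuses the 15°C delta mentioned earlier.

```python
from dataclasses import dataclass


@dataclass
class RackReading:
    rack_id: str
    cold_aisle_c: float     # inlet temperature measured in the cold aisle
    rear_door_top_c: float  # temperature at the top of the rack's rear door

    @property
    def delta_c(self) -> float:
        return self.rear_door_top_c - self.cold_aisle_c


def find_hotspots(readings: list[RackReading], threshold_c: float = 15.0) -> list[tuple[str, float]]:
    """Return (rack_id, delta) for racks at or above the threshold, worst first."""
    flagged = [(r.rack_id, r.delta_c) for r in readings if r.delta_c >= threshold_c]
    return sorted(flagged, key=lambda item: item[1], reverse=True)


# Hypothetical readings, not data from a real deployment
readings = [
    RackReading("R01", 22.0, 33.5),
    RackReading("R02", 21.5, 38.0),
    RackReading("R03", 23.0, 41.0),
]
for rack_id, delta in find_hotspots(readings):
    print(f"{rack_id}: delta {delta:.1f} C")
```

In practice the same ranking would run over many sensors per rack and over time. Sorting worst-first simply tells you where to point the thermal camera next.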

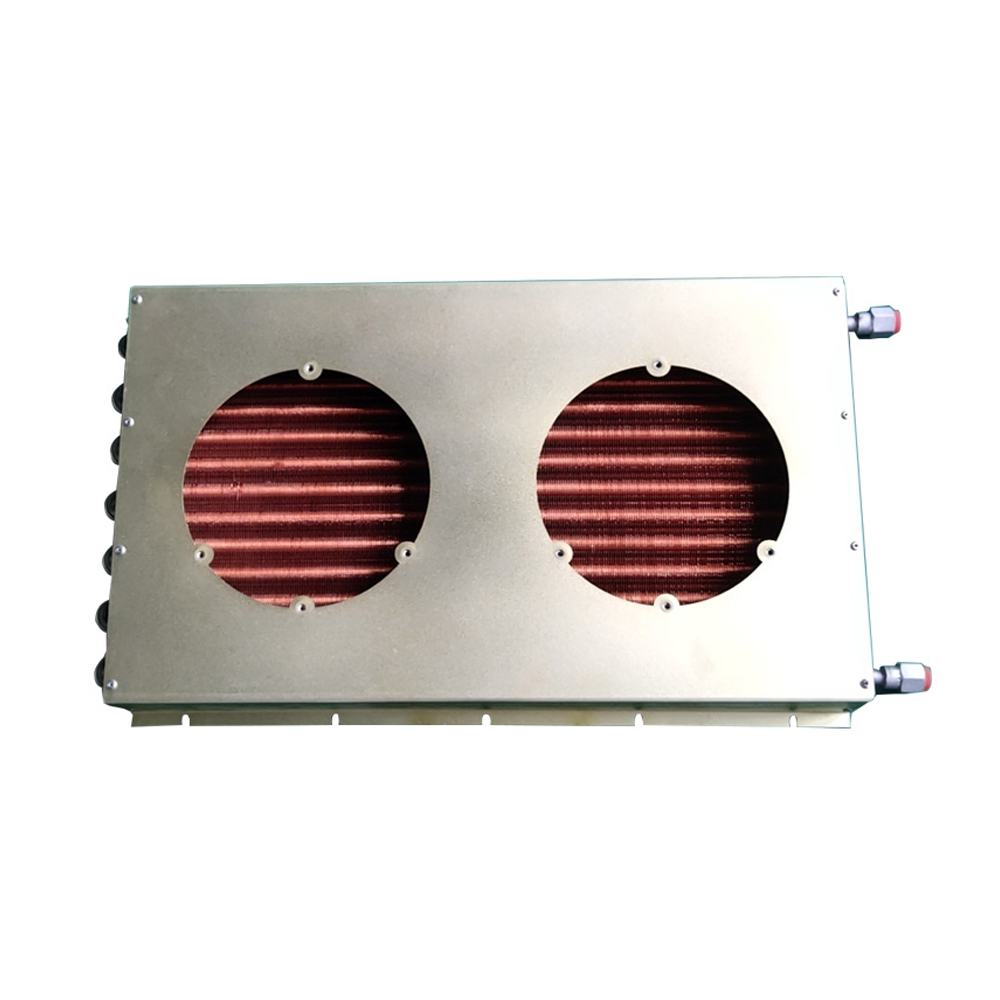

The Cooling Conundrum and a Practical Partner

This is the core challenge. Traditional raised-floor room cooling strategies often fail in a container. The density is too high, the volume too small. You need aggressive, targeted cooling. I’ve seen all kinds of setups: in-row coolers, overhead chilled water systems, even direct liquid cooling retrofits that turned into a plumbing nightmare.
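
The “density too high, volume too small” problem falls straight out of the sensible heat equation. Required airflow equals heat load divided by (air density × specific heat × temperature rise). Every extra kilowatt in a rack needs proportionally more air pushed through the same cramped cross-section. The rough sketch below assumes standard air properties and a 12 K rise across the rack. Both are illustrative assumptions, not figures from our projects.

```python
AIR_DENSITY_KG_M3 = 1.2   # approximate density of air near sea level at about 20 C
AIR_CP_J_KG_K = 1005.0    # specific heat of dry air at constant pressure
M3S_TO_CFM = 2118.88      # 1 m^3/s expressed in cubic feet per minute


def required_airflow_m3s(heat_load_kw: float, delta_t_k: float) -> float:
    """Volumetric airflow needed to carry away a sensible heat load at a given air temperature rise."""
    if delta_t_k <= 0:
        raise ValueError("temperature rise must be positive")
    return heat_load_kw * 1000.0 / (AIR_DENSITY_KG_M3 * AIR_CP_J_KG_K * delta_t_k)


for load_kw in (5, 15, 30):
    flow = required_airflow_m3s(load_kw, delta_t_k=12.0)
    print(f"{load_kw:>2} kW rack: {flow:.2f} m3/s ({flow * M3S_TO_CFM:,.0f} CFM)")
```

Allowing a larger temperature rise reduces the airflow needed, but it pushes exhaust temperatures up toward the hot-spot problem described earlier.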

For many of our deployments in Asia, especially where ambient humidity is a killer, we’ve leaned heavily on specialized industrial-grade cooling units. They’re built to handle the vibration, the constant load, and the corrosion from potential outdoor placement. It’s a different beast from a commercial precision AC. This is where working with the right manufacturer matters. For several projects, we sourced critical cooling infrastructure from Shanghai SHENGLIN M&E Technology Co.,Ltd. You can check their approach at https://www.shenglincoolers.com. They aren’t a container vendor, but a leading manufacturer in the cooling industry. That focus is key. We used their high-capacity, variable-speed units because they understood the thermal shock loads a container server room experiences – a rapid ramp-up of compute demand, for instance. Their engineering team spoke our language of latent heat removal and compressor staging, not just specs on a sheet. That collaboration was crucial to moving from a thermally unstable box to a reliable one.
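
Handling a rapid compute ramp is largely a matter of compressor staging. The controller adds stages as supply air drifts above setpoint and sheds them as it falls, without short-cycling the compressors. The sketch below is a generic deadband-plus-minimum-interval stager. It is not SHENGLIN’s control logic or any vendor’s firmware. The class, the 18°C setpoint, the 1 K deadband, and the 180 s interval are all illustrative assumptions.

```python
from dataclasses import dataclass, field


@dataclass
class CompressorStager:
    """Hypothetical staging controller: deadband around a setpoint plus a minimum time between changes."""
    setpoint_c: float
    deadband_c: float = 1.0
    max_stages: int = 4
    min_interval_s: float = 180.0
    stages_on: int = field(default=0, init=False)
    _last_change_s: float = field(default=float("-inf"), init=False, repr=False)

    def update(self, supply_air_c: float, now_s: float) -> int:
        # Anti-short-cycle: hold the current stage count until the interval has elapsed
        if now_s - self._last_change_s < self.min_interval_s:
            return self.stages_on
        if supply_air_c > self.setpoint_c + self.deadband_c and self.stages_on < self.max_stages:
            self.stages_on += 1  # too warm: bring on one more stage
            self._last_change_s = now_s
        elif supply_air_c < self.setpoint_c - self.deadband_c and self.stages_on > 0:
            self.stages_on -= 1  # too cold: shed one stage
            self._last_change_s = now_s
        return self.stages_on


# Simulated compute ramp: (time in seconds, supply air temperature in C)
stager = CompressorStager(setpoint_c=18.0)
trace = [(0, 21.0), (60, 21.5), (200, 20.5), (400, 19.5), (600, 18.5), (800, 16.5)]
results = [stager.update(temp, t) for t, temp in trace]
print(results)
```

The minimum interval trades responsiveness for compressor life. A real sequence would likely also use load feedforward or variable-speed modulation between stages.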

The lesson here is that the container is just the shell. The sustainability of the whole system depends on the efficiency and longevity of its guts: the cooling plant, the UPS, the power distribution. Sourcing these from industrial specialists, rather than generic data center vendors, often yields more robust and energy-efficient solutions. A failed compressor in a remotely located container is a sustainability and operational disaster, not just an OPEX line item.

Lifecycle and the Disposable Myth

A major misconception is that these are disposable or easily relocated. Sure, they’re movable. But relocating a fully populated, commissioned container data center is a major undertaking. You’re not just hauling a box; you’re moving a live ecosystem. The stress on cabling, pipework, and even the server mounts from lifting and transport can be significant. I’ve overseen a relocation where we had a 5% hardware failure rate post-move, all due to micro-vibrations and shock.

So, the real sustainability thinking must encompass its entire lifecycle. Is it designed for easy component replacement? Are the cooling coils accessible for cleaning? Is the steel treated for long-term outdoor exposure without constant repainting? We specified Corten (weathering) steel for one project, accepting the rust-patina look for its durability. True sustainability means longevity and maintainability. If you’re ripping out the entire cooling system after five years because it’s corroded shut, any initial green credit is wiped out.
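
The “green credit wiped out” point is embodied-carbon arithmetic. Over a fixed service horizon, every premature replacement adds another round of manufacturing emissions. The sketch below counts the installs needed to cover the horizon. The tCO2e values are placeholders chosen only to show the shape of the calculation. They are not measured figures for any product.

```python
import math


def lifetime_embodied_tco2e(shell_tco2e: float, cooling_tco2e: float,
                            cooling_life_years: float, horizon_years: float) -> float:
    """Embodied carbon over the horizon: one shell plus every cooling-plant install needed to cover it."""
    if cooling_life_years <= 0 or horizon_years <= 0:
        raise ValueError("lifetimes must be positive")
    installs = math.ceil(horizon_years / cooling_life_years)
    return shell_tco2e + installs * cooling_tco2e


HORIZON_YEARS = 15
SHELL_TCO2E = 20.0    # placeholder: steel shell and fit-out
COOLING_TCO2E = 30.0  # placeholder: one cooling-plant install

for life in (5, 15):
    total = lifetime_embodied_tco2e(SHELL_TCO2E, COOLING_TCO2E, life, HORIZON_YEARS)
    print(f"cooling life {life:>2} y: {total:.0f} tCO2e total, {total / HORIZON_YEARS:.1f} tCO2e/year")
```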

This is where the “trend” part gets shaky. If it’s just a cheap, quickly assembled box with off-the-shelf parts not meant for 24/7/365 industrial duty, it’s not sustainable. It’s a capital expense shortcut with a hidden operational and environmental cost. The trend should be toward engineered container modules, not just repurposed shipping containers with servers thrown in.

Case in Point: A Hybrid Approach

Our most successful project, from both a performance and a sustainability standpoint (measured as total kWh per compute cycle over four years), wasn’t a pure container play. It was a hybrid. We used a container server room as a modular, high-density compute pod but connected it to a central, highly efficient chilled water plant that also served a traditional data hall. The container handled the spike loads and GPU-heavy workloads while benefiting from the central plant’s superior efficiency and N+1 redundancy. The container’s own cooling system acted primarily as a close-coupled heat exchanger and a backup.
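
The value of N+1 redundancy is easy to quantify under a simplifying assumption: each unit is up independently with the same availability. The plant carries the load when at least N of the installed units are running. The sketch below computes that k-of-n probability. The 0.98 unit availability and the three-chiller load are hypothetical. Independence is a generous assumption, because shared power and controls create common-mode failures.

```python
from math import comb


def k_of_n_availability(required: int, installed: int, unit_availability: float) -> float:
    """Probability that at least `required` of `installed` independent, identical units are up."""
    if not 0 <= required <= installed:
        raise ValueError("need 0 <= required <= installed")
    a = unit_availability
    return sum(comb(installed, k) * a**k * (1 - a) ** (installed - k)
               for k in range(required, installed + 1))


UNIT_AVAILABILITY = 0.98  # hypothetical availability of a single chiller
N = 3                     # hypothetical number of chillers needed for the design load

print(f"N   ({N} installed): {k_of_n_availability(N, N, UNIT_AVAILABILITY):.4f}")
print(f"N+1 ({N + 1} installed): {k_of_n_availability(N, N + 1, UNIT_AVAILABILITY):.4f}")
```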

This model acknowledged the strengths and weaknesses. The container provided speed and modularity; the central infrastructure provided efficiency and resilience. The PUE for the entire complex stayed below 1.25, and the container pod’s effective PUE, when factoring in the central plant’s efficiency, was around 1.15. This is a pragmatic path forward. It treats the container as a functional component within a larger, optimized system, not a magic standalone solution.
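
A pod’s “effective PUE” depends on how the shared plant’s energy is allocated. One straightforward approach charges each load a share of plant energy in proportion to the heat it rejects, using IT energy as a proxy. It may differ from the method behind the figures above. The sketch below uses placeholder energy values, not project data.

```python
def effective_pue(it_energy: float, local_overhead: float,
                  central_plant_energy: float, share_of_central_load: float) -> float:
    """PUE for one load: its IT energy, its own overhead, and its allocated slice of a shared plant."""
    if it_energy <= 0:
        raise ValueError("IT energy must be positive")
    if not 0.0 <= share_of_central_load <= 1.0:
        raise ValueError("share must be between 0 and 1")
    allocated = central_plant_energy * share_of_central_load
    return (it_energy + local_overhead + allocated) / it_energy


# Placeholder annual energies in MWh (illustrative only)
POD_IT = 400.0
HALL_IT = 1200.0
POD_LOCAL_OVERHEAD = 20.0  # e.g. pod fans and close-coupled heat exchanger pumps
CENTRAL_PLANT = 300.0      # chilled water plant serving both the pod and the hall

share = POD_IT / (POD_IT + HALL_IT)  # IT energy used as a proxy for heat rejected
print(f"pod share of central plant: {share:.0%}")
print(f"pod effective PUE: {effective_pue(POD_IT, POD_LOCAL_OVERHEAD, CENTRAL_PLANT, share):.3f}")
```

The allocation rule matters. Splitting plant energy by floor area or by installed capacity instead of by heat would give the pod a different number from the same meter data.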

We learned this after a failure. An earlier, standalone container project for a mining operation in Inner Mongolia saw its dedicated air-cooled chillers struggle massively in the summer desert heat, with condensing temperatures soaring. Efficiency plummeted, and we nearly had a thermal shutdown. We retrofitted an adiabatic pre-cooling system, which helped, but it was a band-aid. The hybrid model was the conceptual fix.
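
Adiabatic pre-cooling helps because a wetted-media pre-cooler lowers the air entering the condenser coil toward the wet-bulb temperature. A direct evaporative stage is commonly characterized by its effectiveness: T_out = T_db − ε(T_db − T_wb). The sketch below assumes ε = 0.8 and uses illustrative conditions. It shows why the gain is large in dry heat but shrinks as the wet-bulb depression narrows.

```python
def precooled_air_c(dry_bulb_c: float, wet_bulb_c: float, effectiveness: float = 0.8) -> float:
    """Air temperature leaving a direct evaporative pre-cooler, given its wet-bulb effectiveness."""
    if not 0.0 <= effectiveness <= 1.0:
        raise ValueError("effectiveness must be between 0 and 1")
    if wet_bulb_c > dry_bulb_c:
        raise ValueError("wet bulb cannot exceed dry bulb")
    return dry_bulb_c - effectiveness * (dry_bulb_c - wet_bulb_c)


# Illustrative conditions, not measurements from the site described above
for label, db, wb in [("dry desert afternoon", 40.0, 22.0), ("humid afternoon", 35.0, 30.0)]:
    out = precooled_air_c(db, wb)
    print(f"{label}: {db:.0f} C -> {out:.1f} C entering the condenser coil")
```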

So, Trend or Tool?

Calling containerized data centers a blanket “sustainable tech trend” is an overstatement. They are a powerful, specific tool. Their sustainability credential is conditional and earned, not inherent. It comes from: 1) avoiding overbuilding permanent space (embodied carbon savings), 2) enabling location-specific efficiency (such as using outside air in cool climates, which they can be designed for), and 3) integrating them into a larger, optimized utility infrastructure.
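
Location-specific efficiency can be screened early by counting the hours in which outside air alone could meet the supply setpoint. The sketch below does that count. Because no weather data appears here, it builds a crude synthetic year from two sine waves as a stand-in. A real study would use a typical-meteorological-year file for the site. The 24°C setpoint, the 3 K heat-exchanger approach (use 0 for direct outside air), and both climate profiles are illustrative assumptions.

```python
import math


def economizer_hours(hourly_outdoor_c: list[float], supply_setpoint_c: float = 24.0,
                     approach_k: float = 3.0) -> int:
    """Count hours in which outdoor air, after the heat-exchanger approach, can meet the supply setpoint."""
    limit = supply_setpoint_c - approach_k
    return sum(1 for t in hourly_outdoor_c if t <= limit)


def synthetic_year(mean_c: float, seasonal_amp_c: float, daily_amp_c: float) -> list[float]:
    """Crude stand-in for a weather file: 8760 hourly values from a seasonal plus a daily sine wave."""
    temps = []
    for hour in range(8760):
        seasonal = seasonal_amp_c * math.sin(2 * math.pi * (hour / 8760 - 0.25))  # peaks mid-year
        daily = daily_amp_c * math.sin(2 * math.pi * (hour % 24 - 9) / 24)        # peaks mid-afternoon
        temps.append(mean_c + seasonal + daily)
    return temps


cool_site = synthetic_year(mean_c=8.0, seasonal_amp_c=12.0, daily_amp_c=5.0)
hot_site = synthetic_year(mean_c=28.0, seasonal_amp_c=8.0, daily_amp_c=6.0)
for name, year in (("cool site", cool_site), ("hot site", hot_site)):
    hours = economizer_hours(year)
    print(f"{name}: {hours} economizer hours ({hours / len(year):.0%} of the year)")
```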

The industry chatter often misses the operational grit. It’s about the quality of the gaskets on the doors, the corrosion resistance of the evaporator coils, the logic of the cooling control sequences, and the serviceability of every component. When you specify these units, you must think like a facilities engineer on a ship or an oil rig – environments that are harsh, isolated, and demand reliability.

So, is it sustainable? It can be. But only if we move past the container-as-a-silver-bullet narrative. It’s a demanding form factor that punishes poor engineering and rewards deep, practical collaboration between IT, mechanical, and structural teams and, often, specialized partners like SHENGLIN for the cooling piece. The trend, if there is one, should be toward this kind of integrated, life-cycle-aware engineering, not just the box itself. The container is just the starting point of the conversation, not the conclusion.